Welcome back to our ML interview series!

After covering Linear Regression and Logistic Regression, it’s time to dive into Decision Trees—a fundamental machine learning algorithm that powers many real-world applications. Whether you're preparing for an ML interview or building robust ML systems, a strong grasp of Decision Trees is crucial.

In this post, we'll explore:

✅ The core principles of Decision Trees

✅ Key concepts: Splitting criteria (Gini & Entropy), pruning, and controlling overfitting

✅ Real-world challenges in applying Decision Trees

✅ System design implications

✅ A set of focused interview questions with detailed solutions

1️⃣ Conceptual Understanding: The Logic of Decisions

What is a Decision Tree?

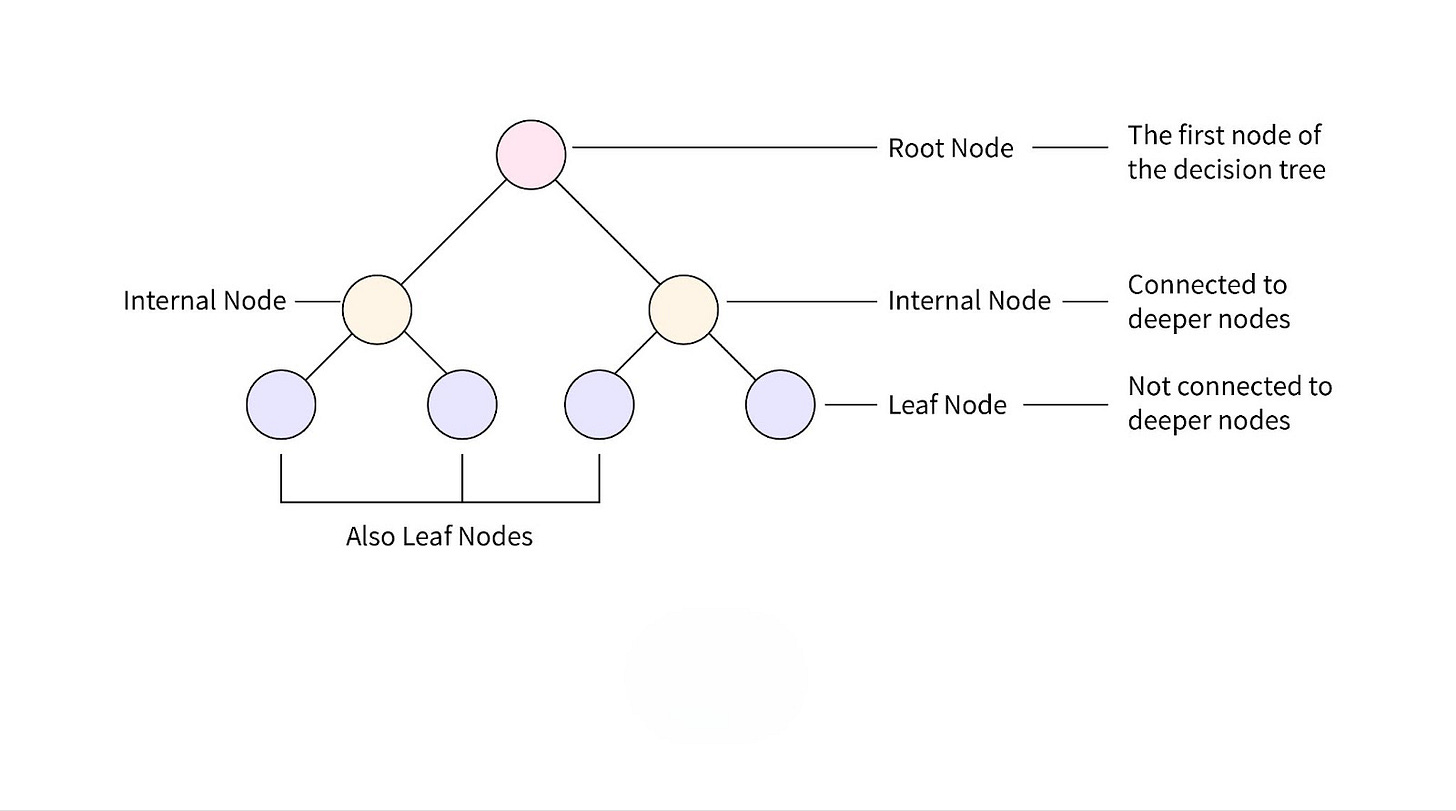

A Decision Tree is a supervised learning model that makes predictions by recursively splitting data into smaller groups based on feature values. It consists of:

Root Node: The starting point where data is split.

Internal Nodes: Decision points that evaluate conditions on features.

Leaf Nodes: The final output (a class label for classification, or a numerical value for regression).
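To make the three node types concrete, here is a minimal sketch of a hand-written tree stored as nested dictionaries. The loan scenario, feature names, and thresholds are hypothetical and chosen only for illustration; a real tree would learn them from data.

```python
# A hypothetical, hand-written tree (not learned from data).
# The outermost dict is the root node, nested dicts with a "feature"
# key are internal nodes, and dicts with a "leaf" key are leaf nodes.
tree = {
    "feature": "income", "threshold": 50_000,
    "left": {"leaf": "reject"},
    "right": {
        "feature": "credit_score", "threshold": 650,
        "left": {"leaf": "review"},
        "right": {"leaf": "approve"},
    },
}


def predict(node, sample):
    """Walk from the root to a leaf, going left when value <= threshold."""
    while "leaf" not in node:
        goes_left = sample[node["feature"]] <= node["threshold"]
        node = node["left"] if goes_left else node["right"]
    return node["leaf"]


decision = predict(tree, {"income": 72_000, "credit_score": 700})
print(decision)
```

Each prediction follows a single root-to-leaf path, which is why a tree's output can be read back as a short chain of if/else rules.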

How Does a Decision Tree Make Decisions?

For a given dataset with features X and target variable Y, the tree learns hierarchical decision rules that reduce uncertainty in predicting Y.

At each step, the algorithm selects the best feature to split on based on impurity measures such as Gini Impurity and Entropy.

Key Concepts in Decision Trees

1. Splitting Criteria: How Does a Tree Choose the Best Split?

At each decision node, the tree evaluates which feature to split on and at what threshold. The goal is to create the purest possible child nodes.

Gini Impurity

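Measures the probability of misclassifying a randomly chosen data point if it were labeled at random according to the class distribution in the node. A lower Gini Impurity means a more homogeneous node.

$$Gini = 1 - \sum_{i=1}^{C} p_i^2$$

where p_i is the proportion of samples belonging to class i in the node and C is the number of classes.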
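As a quick illustration, Gini impurity is commonly computed as one minus the sum of squared class proportions. The sketch below assumes that standard definition; the function name and the toy labels are made up for the example.

```python
from collections import Counter


def gini_impurity(labels):
    """Gini impurity of a list of class labels: 1 - sum(p_i ** 2)."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = Counter(labels)
    return 1.0 - sum((count / n) ** 2 for count in counts.values())


pure = gini_impurity(["yes", "yes", "yes", "yes"])   # single class
mixed = gini_impurity(["yes", "yes", "no", "no"])    # 50/50 split
print(pure, mixed)
```

For two classes the value ranges from 0 (pure node) to 0.5 (evenly mixed node).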

Entropy & Information Gain

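Entropy quantifies uncertainty in a node. Lower entropy indicates purer groups.

$$H(S) = -\sum_{i=1}^{C} p_i \log_2 p_i$$

where p_i is the proportion of samples of class i in node S.

Information Gain (IG) measures the entropy reduction after a split of node S into child nodes S_k:

$$IG = H(S) - \sum_{k} \frac{|S_k|}{|S|} H(S_k)$$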

Gini vs. Entropy: Gini is slightly cheaper to compute because it avoids logarithms, while Entropy has an information-theoretic interpretation. In practice the two usually produce similar trees.
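The following sketch shows how entropy and information gain could be computed by hand, assuming the usual base-2 definition of entropy and a size-weighted average over child nodes. The function names and the toy split are illustrative only.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the children."""
    n = len(parent)
    weighted = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted


parent = ["yes"] * 5 + ["no"] * 5
left = ["yes"] * 4 + ["no"] * 1    # mostly "yes"
right = ["yes"] * 1 + ["no"] * 4   # mostly "no"

gain = information_gain(parent, [left, right])
perfect_gain = information_gain(parent, [["yes"] * 5, ["no"] * 5])
print(round(gain, 3), perfect_gain)
```

A split that separates the classes perfectly recovers the full parent entropy (1 bit here), while the noisier split recovers only part of it.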

2. Pruning: Controlling Overfitting

Pruning is a key technique to prevent Decision Trees from overfitting the training data.

Pre-Pruning (Early Stopping): Stop tree growth early based on predefined conditions (e.g., max depth, minimum samples per node).

Post-Pruning (Cost-Complexity Pruning): First grow the full tree, then remove branches that don’t improve validation performance.
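One way to try post-pruning is scikit-learn's cost-complexity pruning, exposed through `cost_complexity_pruning_path` and the `ccp_alpha` parameter. The sketch below assumes scikit-learn is installed; the breast cancer dataset, the 70/30 split, and the tie-breaking rule are arbitrary choices for the example.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# Grow the full tree, then ask for the candidate pruning strengths.
full_tree = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
path = full_tree.cost_complexity_pruning_path(X_train, y_train)

best_alpha, best_score, best_tree = 0.0, -1.0, None
for alpha in path.ccp_alphas:
    alpha = max(float(alpha), 0.0)  # guard against tiny negative round-off
    tree = DecisionTreeClassifier(random_state=0, ccp_alpha=alpha)
    tree.fit(X_train, y_train)
    score = tree.score(X_val, y_val)
    # On ties, keep the larger alpha, which gives the smaller tree.
    if score >= best_score:
        best_alpha, best_score, best_tree = alpha, score, tree

print(f"alpha={best_alpha:.5f} val_acc={best_score:.3f}")
print(f"leaves: {full_tree.get_n_leaves()} -> {best_tree.get_n_leaves()}")
```

Selecting alpha on a single validation split keeps the example short; cross-validation would give a less noisy choice.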

3. Handling Overfitting

To ensure generalization:

Limit tree depth

Set minimum samples per split

Use pruning techniques

Consider ensemble methods (like Random Forest)
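The first two controls in this list map directly onto scikit-learn hyperparameters. The sketch below compares an unconstrained tree with a constrained one; the dataset and the specific limits (`max_depth=3`, `min_samples_split=20`, `min_samples_leaf=5`) are assumptions picked for illustration, not recommended defaults.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# No limits: the tree keeps splitting until every leaf is pure.
unconstrained = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)

# Early-stopping limits on depth and node size.
constrained = DecisionTreeClassifier(
    max_depth=3, min_samples_split=20, min_samples_leaf=5, random_state=0
).fit(X_train, y_train)

for name, model in [("unconstrained", unconstrained), ("constrained", constrained)]:
    print(
        f"{name:<14} depth={model.get_depth()} leaves={model.get_n_leaves()} "
        f"train={model.score(X_train, y_train):.3f} "
        f"test={model.score(X_test, y_test):.3f}"
    )
```

A large gap between train and test accuracy for the unconstrained tree is the usual sign of overfitting; the constrained tree trades some training accuracy for a simpler model.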

2️⃣ Applied Perspective: Decision Trees in the Real World

Where Are Decision Trees Used?

Finance: Credit risk modeling, loan approval decisions

Healthcare: Diagnosing diseases, predicting patient outcomes

E-commerce: Product recommendations, customer segmentation

Manufacturing: Defect detection, quality control

Challenges When Using Decision Trees

Handling Missing Values: Can be addressed via imputation (mean/median/mode) or surrogate splits.

Dealing with Imbalanced Data: Use class weighting, oversampling, or undersampling.

Feature Importance: Decision Trees provide built-in feature importance metrics.

Continuous vs. Categorical Features: Decision Trees can handle both, but categorical features may need encoding.
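Two of these challenges, missing values and class imbalance, can be handled together in a small scikit-learn pipeline. The sketch below uses synthetic data generated just for the example; median imputation and `class_weight="balanced"` are one reasonable combination, not the only one.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.pipeline import make_pipeline
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(0)

# Synthetic data: the positive class is the minority (roughly 1 in 6).
X = rng.normal(size=(200, 3))
y = (X[:, 0] > 1.0).astype(int)

# Knock out about 10% of the feature values to simulate missing data.
X[rng.random(X.shape) < 0.1] = np.nan

model = make_pipeline(
    SimpleImputer(strategy="median"),  # fill gaps with per-column medians
    DecisionTreeClassifier(
        max_depth=3,
        class_weight="balanced",       # reweight classes by inverse frequency
        random_state=0,
    ),
)
model.fit(X, y)
preds = model.predict(X)
print("predicted positives:", int(preds.sum()), "actual positives:", int(y.sum()))
```

Putting the imputer inside the pipeline means the medians are learned from training data only, which avoids leakage when the pipeline is cross-validated.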

3️⃣ System Design Angle: Decision Trees in ML Systems

When Should You Use Decision Trees in Production?

When interpretability is a priority

When the dataset is relatively small

When handling both numerical and categorical data

Challenges in Production

Model Drift: Trees must be periodically retrained as data distributions change.

Scalability: Training cost grows with the number of samples and features, and very deep trees increase memory use and prediction latency.

Sensitivity to Data Variability: Small changes in data can lead to vastly different trees.

Interview Questions

1️⃣ How do Decision Trees handle categorical vs. continuous features?

2️⃣ What is the role of entropy and information gain in a Decision Tree?

3️⃣ Why might a Decision Tree overfit the training data, and how can you prevent it?

4️⃣ What are the advantages and disadvantages of using Gini Impurity vs. Entropy?

5️⃣ How do Decision Trees handle missing values?

6️⃣ Why are Decision Trees prone to instability, and how does it affect model performance?

7️⃣ If you have a Decision Tree that performs well on training data but poorly on test data, what steps would you take to improve it?

8️⃣ How does pruning work in Decision Trees, and what are the different types of pruning?

9️⃣ Do Decision Trees require feature scaling? Why or why not?

🔟 How do Decision Trees determine feature importance, and what are some limitations of this approach?

Solutions

1️⃣ How do Decision Trees handle categorical vs. continuous features?

Answer:

Categorical Features:

Decision Trees split categorical features by evaluating different ways to group categories and selecting the split that maximizes information gain or minimizes Gini Impurity.

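The original CART and C4.5 algorithms can handle categorical features natively (ID3 works only with categorical features), while some implementations, such as scikit-learn's, require them to be encoded numerically first (e.g., one-hot encoding).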

Continuous Features:

The tree finds an optimal threshold (e.g., X > 5) for splitting continuous variables.

It evaluates different split points and selects the one that minimizes impurity.
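The threshold search for a continuous feature can be sketched as follows: sort the values, try the midpoint between each pair of adjacent distinct values, and keep the one with the lowest weighted Gini impurity. This is a simplified, quadratic-time illustration with made-up data; production libraries use faster incremental updates.

```python
def gini(labels):
    """Gini impurity of a non-empty list of class labels."""
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_threshold(values, labels):
    """Return (threshold, weighted_gini) of the best binary split."""
    pairs = sorted(zip(values, labels))
    n = len(pairs)
    best = (None, float("inf"))
    for i in range(1, n):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate equal values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [label for _, label in pairs[:i]]
        right = [label for _, label in pairs[i:]]
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / n
        if weighted < best[1]:
            best = (threshold, weighted)
    return best


ages = [22, 25, 30, 35, 40, 45]
bought = [0, 0, 0, 1, 1, 1]
threshold, impurity = best_threshold(ages, bought)
print(threshold, impurity)
```

Here the classes separate cleanly between ages 30 and 35, so the chosen threshold is their midpoint and the weighted impurity drops to zero.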

2️⃣ What is the role of entropy and information gain in a Decision Tree?

Answer:

Entropy measures the disorder in a set of labels:

A pure node (all samples belong to one class) has entropy = 0.

A node with equal class distribution has maximum entropy.

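Information Gain (IG) is the reduction in entropy after a split, computed as the parent node's entropy minus the size-weighted average entropy of its children:

$$IG = H(S) - \sum_{k} \frac{|S_k|}{|S|} H(S_k)$$

where H is entropy, S is the parent node, and S_k are the child nodes.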

The tree selects the feature and threshold that maximizes IG.

3️⃣ Why might a Decision Tree overfit the training data, and how can you prevent it?

Answer:

Overfitting happens when the tree learns noise instead of general patterns.

Ways to prevent overfitting:

Limit Tree Depth: Restrict the max depth to prevent deep splits.

Min Samples per Leaf: Require a minimum number of samples per leaf.

Pre-pruning: Stop growth early if further splits don’t significantly improve performance.

Post-pruning: Grow the full tree and prune weak branches using cross-validation.

4️⃣ What are the advantages and disadvantages of using Gini Impurity vs. Entropy?

Answer:

| Criterion | Advantages | Disadvantages |
| --- | --- | --- |
| Gini Impurity | Faster computation | Less theoretically interpretable |
| Entropy | Strong theoretical foundation | Slightly slower due to logarithms |

Gini tends to isolate the most frequent class in its own branch, while Entropy tends to produce slightly more balanced trees.

In practice, both yield similar results, but Gini is preferred for large datasets due to efficiency.

5️⃣ How do Decision Trees handle missing values?

Answer:

Imputation: Fill missing values with mean, median, or mode.

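Surrogate Splits: CART-style trees find an alternative feature that closely mimics the primary split and use it when the primary feature is missing. C4.5 instead sends a sample with a missing value down every branch with fractional weights.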

Ignoring Missing Values: Some implementations simply ignore missing values while splitting.

6️⃣ Why are Decision Trees prone to instability, and how does it affect model performance?

Answer:

Decision Trees are high-variance models, meaning small changes in training data can drastically alter the tree structure.

A single tree may not generalize well, which is why ensemble methods like Bagging (Random Forest) and Boosting (XGBoost) are often used.
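One way to observe this instability is to fit trees on bootstrap resamples of the same dataset and compare their structure. The sketch below assumes scikit-learn and NumPy are available and uses the breast cancer dataset as an arbitrary example; how much the trees differ will vary by dataset and seed.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
rng = np.random.default_rng(0)

summaries = []
for _ in range(5):
    # Bootstrap sample: draw n rows with replacement.
    idx = rng.integers(0, len(X), size=len(X))
    tree = DecisionTreeClassifier(random_state=0).fit(X[idx], y[idx])
    root_feature = int(tree.tree_.feature[0])  # feature index used at the root
    summaries.append((root_feature, tree.get_n_leaves()))

for root_feature, n_leaves in summaries:
    print(f"root feature index={root_feature:2d} leaves={n_leaves}")
```

If the root feature or the leaf count changes between resamples, that is the variance that bagging averages away.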

7️⃣ If you have a Decision Tree that performs well on training data but poorly on test data, what steps would you take to improve it?

Answer:

Check Overfitting: If the tree is too deep, it may memorize training data. Use pruning.

Use More Training Data: Helps the tree learn general patterns instead of noise.

Apply Ensemble Methods: Random Forests and Gradient Boosting improve generalization.

Feature Engineering: Remove irrelevant or redundant features.

Use Different Splitting Criteria: If using Gini, try Entropy, and vice versa.

8️⃣ How does pruning work in Decision Trees, and what are the different types of pruning?

Answer:

Pre-pruning: Stops tree growth early (e.g., max depth, min samples per split).

Post-pruning: Grows the full tree, then removes branches that don’t improve validation set performance.

Post-pruning often gives better results because pre-pruning can stop before a split whose benefit only shows up deeper in the tree, but it costs more compute since the full tree must be grown first.

9️⃣ Do Decision Trees require feature scaling? Why or why not?

Answer:

No, Decision Trees do not require feature scaling.

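Unlike distance-based models (e.g., k-NN) or models trained with gradient descent, Decision Trees compare one feature at a time against a threshold.

A split depends only on the ordering of a feature's values, not on their scale, so standardization or any other monotonic transformation leaves the resulting partitions unchanged.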
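This can be checked with a small pure-Python sketch: find the best Gini split on a feature, then repeat after rescaling and after a log transform, and compare which samples land in the left child. The helper names and data are made up for the example.

```python
import math


def gini(labels):
    """Gini impurity of a non-empty list of class labels."""
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_left_indices(values, labels):
    """Indices of the samples sent left by the best single-feature split."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    n = len(order)
    best_cut, best_impurity = None, float("inf")
    for cut in range(1, n):
        if values[order[cut - 1]] == values[order[cut]]:
            continue  # equal values cannot be separated
        left = [labels[i] for i in order[:cut]]
        right = [labels[i] for i in order[cut:]]
        impurity = (len(left) * gini(left) + len(right) * gini(right)) / n
        if impurity < best_impurity:
            best_cut, best_impurity = cut, impurity
    return frozenset(order[:best_cut])


values = [1.5, 3.0, 0.5, 4.2, 2.8, 5.1]
labels = [0, 1, 0, 1, 0, 1]

raw = best_left_indices(values, labels)
scaled = best_left_indices([v * 1000 + 7 for v in values], labels)
logged = best_left_indices([math.log(v) for v in values], labels)
print(sorted(raw), raw == scaled == logged)
```

The threshold value itself changes with the transformation, but the partition of samples does not, which is what determines the tree's predictions.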

🔟 How do Decision Trees determine feature importance, and what are some limitations of this approach?

Answer:

Feature importance is based on how much impurity is reduced by splits on that feature.

A feature with high importance means it contributes significantly to reducing uncertainty.

Limitations:

Biased Importance: Features with more possible split points (e.g., continuous variables) tend to appear more important.

Correlated Features: If two features are highly correlated, one may appear very important while the other seems useless.
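To see both the built-in measure and one common cross-check, the sketch below prints scikit-learn's impurity-based `feature_importances_` next to `permutation_importance` computed on held-out data. The dataset, tree depth, and number of repeats are arbitrary choices for illustration.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

data = load_breast_cancer()
X_train, X_test, y_train, y_test = train_test_split(
    data.data, data.target, random_state=0
)
tree = DecisionTreeClassifier(max_depth=4, random_state=0).fit(X_train, y_train)

# Impurity-based importance: normalized total impurity reduction per feature.
impurity_importance = tree.feature_importances_

# Permutation importance: drop in test accuracy when a feature is shuffled.
perm = permutation_importance(tree, X_test, y_test, n_repeats=10, random_state=0)

top = impurity_importance.argsort()[::-1][:5]
for i in top:
    print(
        f"{data.feature_names[i]:<25} "
        f"impurity={impurity_importance[i]:.3f} "
        f"permutation={perm.importances_mean[i]:.3f}"
    )
```

When the two rankings disagree sharply, the correlated-feature and split-count biases described above are plausible explanations worth checking.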

What’s Next?

In our next post, we’ll dive into Ensemble Methods, which take Decision Trees to the next level. We'll explore Bagging, Boosting, and Random Forests, breaking down how they improve performance, reduce overfitting, and enhance model stability. Stay tuned for more!

